Most companies that host content have a "report" function. You might have once reported a post on Facebook, a file link on Dropbox, or an image on Imgur (to take a few examples) for being bad in some way. If the reason for your report is that the content involves child abuse, then the company is generally accountable for checking it out. This means different things depending on the type of content, the country, and lots of other moving parts, but it is the "safety of the children" argument at work. In practice, an American content host will often let NCMEC know, since NCMEC compiles reports and forwards them to law enforcement. Sometimes the content will simply be removed.

An interesting abuse edge case here I've pondered for a while: someone hacks into your account and intentionally posts bad stuff to get you in trouble. The host is required by law to report it once they have reasonable suspicion. It could be pretty difficult to prove that someone did this if the company's logs were inadequate. A similar flavor of this: someone sent Brian Krebs heroin in the mail, and then "tipped off" the police.

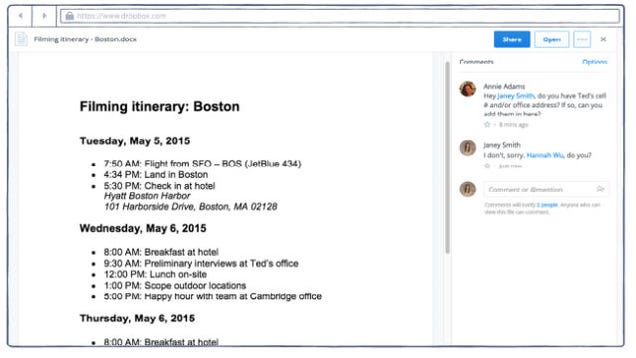

Most service providers (including Facebook and Google) actively scan uploads against PhotoDNA hashes of known CP provided by NCMEC and other groups, and will report a match once they detect and then verify it. I recall someone getting charged after using Gmail in a similar way. The scanning isn't a legal mandate; they do it voluntarily. This isn't "Dropbox employees digging through everybody's files hunting for CP". It's all automated, likely triggered by uploads of certain types of files (images/videos). They almost certainly have a human verify a match before reporting it (which is probably a 100% manual process).
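To make the "automated match, then human check" flow concrete, here is a rough sketch of how such a pipeline might be wired up. Everything in it is an assumption for illustration: `fingerprint`, `UploadScanner`, `SCANNED_TYPES`, and the review queue are hypothetical names, and SHA-256 is only a stand-in. PhotoDNA is a proprietary perceptual hash that is designed to survive resizing and re-encoding, which an exact cryptographic hash does not.

```python
import hashlib
from dataclasses import dataclass, field
from typing import List, Set, Tuple

# Assumption: only these upload types trigger a scan.
SCANNED_TYPES = {"image/jpeg", "image/png", "video/mp4"}


def fingerprint(data: bytes) -> str:
    """Stand-in fingerprint. Real systems use a perceptual hash, not SHA-256."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class UploadScanner:
    # Fingerprints supplied by an outside clearinghouse (hypothetical feed).
    known_hashes: Set[str]
    # Matches wait here for a human reviewer; nothing is reported automatically.
    review_queue: List[Tuple[str, str]] = field(default_factory=list)

    def on_upload(self, upload_id: str, content_type: str, data: bytes) -> bool:
        """Return True if the upload matched and was queued for human review."""
        if content_type not in SCANNED_TYPES:
            return False  # other file types are never scanned
        digest = fingerprint(data)
        if digest in self.known_hashes:
            self.review_queue.append((upload_id, digest))
            return True
        return False


scanner = UploadScanner(known_hashes={fingerprint(b"known-flagged-bytes")})
matched = scanner.on_upload("upload-1", "image/png", b"known-flagged-bytes")
ignored = scanner.on_upload("upload-2", "image/png", b"holiday photo")
skipped = scanner.on_upload("upload-3", "text/plain", b"known-flagged-bytes")
```

The point of the sketch is that nobody looks at a file unless its fingerprint is already on the list, and even then the match only lands in a queue for a person to verify.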
